New blog address

Everyone is welcome to visit

保福寺研究僧

The Github contest ended last weekend. Liang Xiang (xlvector) and I cooperated on an entry that took first place.

We won a bottle of aged Pappy Van Winkle, a large Github account and bragging rights among our fellow geeks.

Scott Chacon asked me to write up the algorithms we used; that write-up is now in the project's README.

I learned 3 more lessons from the Github contest.

Diversity

While our main focus was improving our score, we did stumble on one interesting avenue for future research.

Imagine a user who watches Rails projects for work and Haskell-related ones for fun. Treating that user as two distinct users should outperform treating them as a single user. Using a kNN algorithm with too large a value of k on a user with two or more very different interest clusters would guarantee poor performance.

Given the time constraints, we decided simply to increase recommendation diversity.

Ensembles win, but require preparation

Netflix taught us that ensembles win. Ilya Grigorik submitted an entry exploiting that fact and wrote about it in "Collaborative Filtering with Ensembles".

The ideal cooperation scenario would have involved participants using the same training data and result file formats. Had I realized that sooner, the winning entry would have included Jeremy's results.
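To make the point about shared formats concrete, here is a minimal Python sketch of a linear blend. It assumes every recommender emits the same format for a given user, a dict mapping project id to score; the min-max normalisation and the hand-picked weights are illustrative assumptions, not what the winning entry actually used.

```python
from collections import defaultdict


def normalise(scores):
    """Min-max scale one model's scores to [0, 1] so models are comparable."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {p: 1.0 for p in scores}
    return {p: (s - lo) / (hi - lo) for p, s in scores.items()}


def blend(model_scores, weights, k=10):
    """model_scores: one dict (project -> score) per recommender, same user.
    Returns the k projects with the highest weighted sum of normalised scores."""
    total = defaultdict(float)
    for scores, weight in zip(model_scores, weights):
        for project, s in normalise(scores).items():
            total[project] += weight * s
    # Ties are broken by project id so the output is deterministic.
    return sorted(total, key=lambda p: (-total[p], p))[:k]
```

With a common result format, adding a teammate's model is just one more dict in `model_scores`; in practice the weights would be fit on a local holdout rather than chosen by hand.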

Overfitting

Avoiding overfitting is usually very easy, and early on we decided to test locally on our own subset of the training data and our own test file. Ideally, we would have had time to do the same with each of the heuristics and data sources we were using. Not doing so ironically led us to over-emphasize those weights in our blending, thereby underfitting the test set.
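A minimal Python sketch of the kind of local test described above, assuming the contest setup of one hidden watched project per user and up to ten guesses per user. `holdout_split` and `hit_rate` are hypothetical helper names, and choosing the hidden project uniformly at random is an assumption.

```python
import random


def holdout_split(watches, seed=0):
    """Hide one watched project per user (users with two or more watches).
    Returns (train, test): train maps user -> set, test maps user -> hidden project."""
    rng = random.Random(seed)
    train, test = {}, {}
    for user, items in watches.items():
        ordered = sorted(items)  # sort so the seeded choice is reproducible
        if len(ordered) >= 2:
            hidden = rng.choice(ordered)
            test[user] = hidden
            train[user] = set(ordered) - {hidden}
        else:
            train[user] = set(ordered)
    return train, test


def hit_rate(recommend, train, test, k=10):
    """Fraction of test users whose hidden project appears in their top-k list.
    recommend(user, train) must return a ranked list of project ids."""
    if not test:
        return 0.0
    hits = sum(1 for user, hidden in test.items() if hidden in recommend(user, train)[:k])
    return hits / len(test)
```

Running the same split once per heuristic or data source, rather than only for the final blend, is the step the paragraph says we skipped.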

Thanks to Scott and Github for giving us a challenge between Netflix contests.

A user buys a book because he or she likes it. So why would the user give the book a low rating after reading it? Perhaps the book did not fully satisfy the user's interests. Still, the user must like most of the book's properties (the topic, the author, or the fact that one of his best friends recommended it); otherwise he would not have bought it. But one or two shortcomings of the book may lead him to give it a low rating.

The above may sound like common sense, but we can draw one conclusion from this example: if a user gives a book a high rating, he probably likes all of its properties; but if a user gives a book a low rating, he may dislike only part of it, because if he disliked every part of it he would never have bought it.

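Hehe, the MP3 can be downloaded from this page. I listened to it once and understood most of it; it seems my English listening has improved quite a lot over the past few years. Hehe.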

The three people in the interview were all very active in the Ensemble, and we worked well together.

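This idea was very useful in the Github contest. I generally use an extreme-value approach to filter out things a user does not like.

For example, suppose a user has watched many projects, and the most popular of them has popularity A. Then we can assume that the user is unlikely to watch a project whose popularity exceeds A.

Another example: a user has watched many projects, all created after 2006. Then we can assume that the user is unlikely to watch projects created before 2006.

Or: a user has watched many projects, but they are all small programs of only a few hundred lines. Then we can likewise assume that the user is unlikely to watch large projects.

Many more such examples could be given, and every extreme value we added improved the results. However, these methods only work for users who have watched many projects, because only their extreme values are reliable (i.e. have a relatively high confidence level).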
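The extreme-value filtering above can be sketched in Python as follows. The attribute tables, the `"upper"`/`"lower"` sides and the `min_watched=20` cut-off are hypothetical; the post does not say how many watches are needed before an extreme becomes reliable.

```python
def extreme_value_filter(watched, candidates, attributes, min_watched=20):
    """attributes maps a name to (value_of, side), where value_of is a dict
    project -> value. side "upper" drops candidates above the user's maximum
    (e.g. popularity, size); side "lower" drops candidates below the user's
    minimum (e.g. creation year)."""
    if len(watched) < min_watched:
        return list(candidates)  # too few watches: the extremes are not reliable
    checks = []
    for value_of, side in attributes.values():
        seen = [value_of[p] for p in watched]
        if side == "upper":
            checks.append(lambda c, v=value_of, m=max(seen): v[c] <= m)
        elif side == "lower":
            checks.append(lambda c, v=value_of, m=min(seen): v[c] >= m)
        else:
            raise ValueError(f"unknown side: {side}")
    # Keep a candidate only if it lies inside every observed extreme.
    return [c for c in candidates if all(check(c) for check in checks)]
```

The default arguments `v=` and `m=` bind each attribute's table and limit at definition time, so every lambda checks its own attribute rather than the last one in the loop.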

My Github Contest solution: item-based KNN

Item-based KNN is one of the most widely used methods for top-K recommendation. Related papers include:

Item-based collaborative filtering recommendation algorithms

Item-based top-N recommendation algorithms

Amazon.com recommendations: Item-to-item collaborative filtering

In the Github contest I started with item-based KNN, but my implementation differs from those papers in several details, mainly the following:

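1) If two projects have been watched by the same user, that user contributes some amount of similarity between them. In the traditional similarity computation every user contributes equally. But consider two users, one who has watched 100 projects and one who has watched only 2: the user who watched 2 projects should contribute more similarity than the user who watched 100. (This effect is called inverse user frequency, the counterpart of idf in information retrieval.)

2) Recommendation: for a user, we find all the projects he has watched, find the projects similar to each of them, and from those pick the projects he has not watched that are most similar to the ones he has. For a project j and a user u, u's preference for j is defined as

$$p(u,j) = \sum_{i \in N(u)} w(i,j)$$

where w(i,j) is the similarity between projects i and j, and N(u) is the set of all projects u has watched. Because w(i,j) is a linear correlation coefficient lying between 0 and 1, my implementation squares w(i,j); the main purpose is to weaken the influence of small similarities.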
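Here is a minimal Python sketch of the two points above. The inverse-user-frequency weight 1/log(1 + |N(u)|) and the cosine-style normalisation by sqrt(|U(i)||U(j)|), where U(i) is the set of users watching project i, are assumptions standing in for the post's unspecified correlation coefficient; the scoring step follows the squared variant described in point 2.

```python
import math
from collections import defaultdict
from itertools import combinations


def item_similarity(watches):
    """watches: dict user -> set of watched project ids.
    Returns sim[i][j], a symmetric project-project similarity."""
    item_count = defaultdict(int)                 # |U(i)|: watchers of project i
    co = defaultdict(lambda: defaultdict(float))  # IUF-weighted co-watch totals
    for items in watches.values():
        for i in items:
            item_count[i] += 1
        if len(items) < 2:
            continue  # a single watch links no pair of projects
        # Assumed inverse-user-frequency weight: users with few watches count more.
        iuf = 1.0 / math.log(1 + len(items))
        for i, j in combinations(items, 2):
            co[i][j] += iuf
            co[j][i] += iuf
    # Assumed cosine-style normalisation; keeps every w(i, j) in (0, 1).
    sim = defaultdict(dict)
    for i, row in co.items():
        for j, total in row.items():
            sim[i][j] = total / math.sqrt(item_count[i] * item_count[j])
    return sim


def recommend(user_items, sim, k=10):
    """Rank unwatched projects j by p(u, j) = sum over watched i of w(i, j) ** 2."""
    scores = defaultdict(float)
    for i in user_items:
        for j, w in sim.get(i, {}).items():
            if j not in user_items:
                scores[j] += w * w  # squaring shrinks small similarities the most
    return sorted(scores, key=lambda j: (-scores[j], j))[:k]
```

Because only users with at least two watches contribute pairs, each contribution is at most 1/log(3), which is below 1, so the normalised similarity stays under 1 and squaring it always shrinks it.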

Oddly enough "what did I learn?" is also the question that I most frequently ask myself, and so far I haven't come up with any sort of gestalt answer. I find this comforting. A pat or encapsulated answer tends to trivialize any endeavor. Of course the more enlightened press seems to want to grab hold of the "big concept" ideas: crowdsourcing, contest-motivated problem solving, new paradigms for business analytics, that sort of thing. While those are important, and certainly make more interesting copy than explanations of why better movie rating predictions are good for Netflix, they don't offer any insight into the more personal explorations that contest participants undoubtedly experienced.

So, for better or worse, I'm going to try to cover some of what I, personally, learned. This will obviously take more than a single blog entry, and I have no doubt that some of my "revelations" will seem simple and obvious (possibly stupid), while others may seem more like heresy. There's not much I can do about that. After all, the only public opinion prediction engine I have available is incapable of doing more than making wild guesses – I refer, of course, to the squishy pattern matcher between my ears.

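If we call users who have watched fewer than 4 projects inactive and everyone else active, our current experiments show a prediction accuracy of 60% for inactive users but only 40% for active users.

The reason is that an active user actually has many projects he might plausibly watch, while the contest asks us to guess one particular project; that is like looking for a needle in a haystack, and it is fairly hard. This is where diversity comes into play.

For now I have only tried a simple method: when recommending to active users, I keep only the top few projects from any one category and lower the weights of the rest. So far this method has been very useful.

This also shows that diversity is important in prediction as well.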
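A minimal Python sketch of this per-category down-weighting. The category labels (for example a project's main language), `keep=2` and `decay=0.5` are hypothetical choices; the post only says that the top few projects per category keep their weight and later ones are down-weighted.

```python
from collections import defaultdict


def diversify(scored, category, keep=2, decay=0.5):
    """scored: list of (project, score); category: dict project -> label.
    Within each category the top `keep` projects keep their score; each later
    project in that category is multiplied by one more factor of `decay`.
    Projects without a label are grouped together under None."""
    seen = defaultdict(int)
    out = []
    for project, score in sorted(scored, key=lambda ps: -ps[1]):
        cat = category.get(project)
        rank = seen[cat]  # how many higher-scored projects share this category
        seen[cat] += 1
        out.append((project, score * decay ** max(0, rank - keep + 1)))
    return sorted(out, key=lambda ps: -ps[1])
```

Following the post, this would be applied only to active users; for a user with a single interest cluster it merely reorders the tail of the list.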

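The first kind of user buys many books, but mostly bestsellers; this seems to describe most people.

The other kind of user also buys many books, but mostly obscure ones; such a user seems to be a scholar in some field. For example, many of the math books I used to look up on Douban were very obscure, and many of them did not even have a cover image.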
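One rough way to place a user between these two kinds is the average popularity of the books they bought. A minimal Python sketch, assuming a hypothetical `popularity` table of purchase counts per book; taking the log is an assumption so that a single huge bestseller does not dominate the average.

```python
import math


def mean_log_popularity(user_items, popularity):
    """Average of log(1 + popularity) over a user's items.
    High values suggest a bestseller reader, low values a niche reader."""
    if not user_items:
        raise ValueError("user has no items")
    return sum(math.log1p(popularity[i]) for i in user_items) / len(user_items)
```

Where to draw the line between the two groups is left open here; comparing a user against the distribution of this value over all users is one option.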

This is Neil Hunt, Chief Product Officer at Netflix.

To everyone who participated in the Netflix Prize: You've made this a truly remarkable contest and you've brought great innovation to the field. We applaud you for your contributions and we hope you've enjoyed the journey. We look forward to announcing a winner of the $1M Grand Prize in late September.

And, like so many great movies, there will be a sequel.

The advances spurred by the Netflix Prize have so impressed us that we’re planning Netflix Prize 2, a new big money contest with some new twists.

Here’s one: three years was a long time to compete in Prize 1, so the next contest will be a shorter time limited race, with grand prizes for the best results at 6 and 18 months.

While the first contest has been remarkable, we think Netflix Prize 2 will be more challenging, more fun, and even more useful to the field.

Stay tuned for more details when we announce the winners of Prize 1 in September.

I am really looking forward to Netflix's next contest, and I hope they release more data and pose more challenging problems.

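When designing a real recommender system, we cannot compute a user's rating for every movie and then sort them all to find the top K. In BellKor's paper, when evaluating a rating-prediction method with a top-K metric, they randomly selected 1000 movies, predicted and sorted their ratings, and then took the top K.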
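A minimal Python sketch of the sampled top-K evaluation described above, under stated assumptions: the held-out test movie is ranked together with the 1000 randomly drawn movies, and `predict(user, movie)` is a hypothetical rating predictor supplied by the caller.

```python
import random


def hit_at_k(predict, user, test_movie, all_movies, n_random=1000, k=10, rng=None):
    """Rank test_movie among n_random randomly drawn other movies by predicted
    rating; return True if it lands in the top k."""
    if rng is None:
        rng = random.Random(0)  # fixed seed for reproducible sampling
    pool = [m for m in all_movies if m != test_movie]
    candidates = rng.sample(pool, n_random) + [test_movie]
    ranked = sorted(candidates, key=lambda m: predict(user, m), reverse=True)
    return test_movie in ranked[:k]
```

Averaging this over many held-out (user, movie) pairs gives a top-K hit rate while predicting only 1001 ratings per pair instead of a rating for every movie.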

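In a real system, we first use binary data to find a candidate set. This step is really the top-K process (it needs no ratings, only the 0-1 relationship matrix). We then predict the user's ratings for the movies in the candidate set and sort the candidates by those ratings. So top-K recommendation and the Netflix rating-prediction task are not one problem but two different problems within a recommender system, and it is right to evaluate them with different methods.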
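The two-stage design can be sketched in Python as follows. Stage one counts co-occurrences in the 0-1 matrix to pick candidates; stage two calls a hypothetical `predict_rating(user, movie)` only on those candidates. The function name, the co-occurrence counting and the `n_candidates` cut-off are illustrative assumptions.

```python
from collections import Counter


def two_stage(user, user_items, item_users, predict_rating, n_candidates=100, k=10):
    """user_items: user -> set of movies; item_users: movie -> set of users.
    Stage 1 uses only the 0-1 matrix; stage 2 ranks by predicted rating."""
    watched = user_items[user]
    counts = Counter()
    # Stage 1: count how often each unseen movie co-occurs with the user's movies.
    for i in watched:
        for other in item_users[i]:
            for j in user_items[other]:
                if j not in watched:
                    counts[j] += 1
    candidates = [j for j, _ in counts.most_common(n_candidates)]
    # Stage 2: predict ratings only for the candidates, then sort by them.
    return sorted(candidates, key=lambda j: predict_rating(user, j), reverse=True)[:k]
```

The rating model never sees movies outside the candidate set, which is why its quality and the candidate generator's quality can be measured separately.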

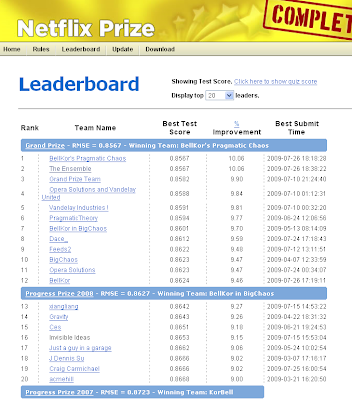

A contest set up by Netflix, which offered a $1 million prize to anyone who could significantly improve its movie recommendation system, ended on Sunday with two teams in a virtual dead heat, and no winner to be declared until September.

But the contest, which began in October 2006, has already produced an impressive legacy. It has shaped careers, spawned at least one start-up company and inspired research papers. It has also changed conventional wisdom about the best way to build the automated systems that increasingly help people make online choices about movies, books, clothing, restaurants, news and other goods and services.

These so-called recommendation engines are computing models that predict what a person might enjoy based on statistical scoring of that person’s stated preferences, past consumption patterns and similar choices made by many others — all made possible by the ease of data collection and tracking on the Web.

“The Netflix prize contest will be looked at for years by people studying how to do predictive modeling,” said Chris Volinsky, a scientist at AT&T Research and a leader of one of the two highest-ranked teams in the competition.

The biggest lesson learned, according to members of the two top teams, was the power of collaboration. It was not a single insight, algorithm or concept that allowed both teams to surpass the goal Netflix, the movie rental company, set nearly three years ago: to improve the movie recommendations made by its internal software by at least 10 percent, as measured by predicted versus actual one-through-five-star ratings by customers.

Instead, they say, the formula for success was to bring together people with complementary skills and combine different methods of problem-solving. This became increasingly apparent as the contest evolved. Mr. Volinsky’s team, BellKor’s Pragmatic Chaos, was the longtime front-runner and the first to surpass the 10 percent hurdle. It is actually a seven-person collection of other teams, and its members are statisticians, machine learning experts and computer engineers from the United States, Austria, Canada and Israel.

When BellKor’s announced last month that it had passed the 10 percent threshold, it set off a 30-day race, under contest rules, for other teams to try to best it. That led to another round of team-merging by BellKor’s leading rivals, who assembled a global consortium of about 30 members, appropriately called the Ensemble.

Submissions came fast and furious in the last few weeks from BellKor’s and the Ensemble. Just minutes before the contest deadline on Sunday, the Ensemble’s latest entry edged ahead of BellKor’s on the public Web leader board — by one-hundredth of a percentage point.

“The contest was almost a race to agglomerate as many teams as possible,” said David Weiss, a Ph.D. candidate in computer science at the University of Pennsylvania and a member of the Ensemble. “The surprise was that the collaborative approach works so well, that trying all the algorithms, coding them up and putting them together far exceeded our expectations.”

The contestants evolved, it seems, along with the contest. When the Netflix competition began, Mr. Weiss was one of three seniors at Princeton University, including David Lin and Lester Mackey, who made up a team called Dinosaur Planet. Mr. Lin, a math major, went on to become a derivatives trader on Wall Street.

But Mr. Mackey is a Ph.D. candidate at the Statistical Artificial Intelligence Lab at the University of California, Berkeley. “My interests now have been influenced by working on the Netflix prize contest,” he said.

Software recommendation systems, Mr. Mackey said, will increasingly become common tools to help people find useful information and products amid the explosion of information and offerings competing for their attention on the Web. “A lot of these techniques will propagate across the Internet,” he predicted.

That is certainly the hope of Domonkos Tikk, a Hungarian computer scientist and a member of the Ensemble. Mr. Tikk, 39, and three younger colleagues started working on the contest shortly after it began, and in 2007 they teamed up with the Princeton group. “When we entered the Netflix competition, we had no experience in collaborative filtering,” Mr. Tikk said.

Yet based on what they learned, Mr. Tikk and his colleagues founded a start-up, Gravity, which is developing recommendation systems for commercial clients, including e-commerce Web sites and a European cellphone company.

Though the Ensemble team nudged ahead of BellKor’s on the public leader board, it is not necessarily the winner. BellKor’s, according to Mr. Volinsky, remains in first place, and Netflix contacted it on Sunday to say so.

And in an online forum, another member of the BellKor’s team, Yehuda Koren, a researcher for Yahoo in Israel, said his team had “a better test score than the Ensemble,” despite what the rival team submitted for the leader board.

So is BellKor’s the winner? Certainly not yet, according to a Netflix spokesman, Steve Swasey. “There is no winner,” he said.

A winner, Mr. Swasey said, will probably not be announced until sometime in September at an event hosted by Reed Hastings, Netflix’s chief executive. The movie rental company is not holding off for maximum public relations effect, Mr. Swasey said, but because the winner has not yet been determined.

The Web leader board, he explained, is based on what the teams submit. Next, Netflix’s in-house researchers and outside experts have to validate the teams’ submissions, poring over the submitted code, design documents and other materials. “This is really complex stuff,” Mr. Swasey said.

In Hungary, Mr. Tikk did not sound optimistic. “We didn’t get any notification from Netflix,” he said in a phone interview. “So I think the chances that we won are very slight. It was a nice try.”