Introduction

A composite image I is formed from a foreground F and a background B, blended by an alpha matte (the per-pixel opacity alpha of the foreground):

I = alpha*F + (1-alpha)*B
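As a minimal sketch, the compositing equation can be evaluated for a whole image at once. This assumes NumPy, colors stored as floats in [0, 1], and a matte of shape (H, W) next to (H, W, 3) color images. The function name `composite` is hypothetical.

```python
import numpy as np


def composite(alpha, F, B):
    """Blend foreground F over background B: I = alpha*F + (1-alpha)*B.

    alpha: (H, W) matte with values in [0, 1]; F, B: (H, W, 3) float images.
    """
    a = alpha[..., None]  # add a channel axis so alpha broadcasts over RGB
    return a * F + (1.0 - a) * B


# Usage: a half-transparent red foreground over a blue background.
alpha = np.full((2, 2), 0.5)
F = np.zeros((2, 2, 3))
F[..., 0] = 1.0  # pure red
B = np.zeros((2, 2, 3))
B[..., 2] = 1.0  # pure blue
I = composite(alpha, F, B)  # every pixel is an even red/blue mix
```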

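Matting is the inverse problem: recovering alpha, F and B from a given image I. For a color image, each pixel gives 3 equations (one per channel) but has 7 unknowns (alpha plus the RGB colors of F and B), so the problem is underconstrained without extra user input.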

The user has to divide the image into three regions (a trimap): foreground, background and unknown. In the foreground region, F = I, alpha = 1 and B = 0; in the background region, F = 0, alpha = 0 and B = I. Our task is to compute F, B and alpha in the unknown region.
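The known-region rules can be applied directly from a trimap. A minimal sketch, assuming NumPy and a trimap encoded with 255 for foreground, 0 for background and any other value for unknown; that label convention and the name `init_from_trimap` are hypothetical, not taken from this project.

```python
import numpy as np


def init_from_trimap(I, trimap, fg_value=255, bg_value=0):
    """Split a trimap into regions and fill in the known F, B and alpha.

    I: (H, W, 3) float image; trimap: (H, W) labels. The label values
    fg_value/bg_value are an assumed convention; everything else is unknown.
    """
    fg = trimap == fg_value
    bg = trimap == bg_value
    unknown = ~(fg | bg)
    F = np.zeros_like(I)
    B = np.zeros_like(I)
    alpha = np.zeros(trimap.shape, dtype=float)
    F[fg] = I[fg]      # foreground: F = I, alpha = 1, B = 0
    alpha[fg] = 1.0
    B[bg] = I[bg]      # background: F = 0, alpha = 0, B = I
    # Unknown pixels keep placeholder zeros until F, B and alpha are estimated.
    return F, B, alpha, unknown


# Usage: one foreground, one unknown and one background pixel.
I = np.random.default_rng(0).random((1, 3, 3))
trimap = np.array([[255, 128, 0]], dtype=np.uint8)
F, B, alpha, unknown = init_from_trimap(I, trimap)
```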

Consider a pixel P in the unknown region. We first estimate F and B at P.
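The text does not say how F and B are estimated at P. Purely as a placeholder, the sketch below takes the mean color of the k nearest known foreground pixels as F and of the k nearest known background pixels as B. This nearest-neighbor averaging is a hypothetical stand-in chosen for brevity, not the Bayesian estimator of Chuang et al.; `estimate_fb` and `k` are illustrative names, and NumPy is assumed.

```python
import numpy as np


def estimate_fb(I, fg, bg, p, k=4):
    """Crude F, B estimate at pixel p = (row, col).

    Hypothetical stand-in: mean color of the k nearest known foreground
    (resp. background) pixels. Assumes fg and bg each contain at least one pixel.
    """
    def nearest_mean(mask):
        ys, xs = np.nonzero(mask)
        d2 = (ys - p[0]) ** 2 + (xs - p[1]) ** 2  # squared distance to p
        idx = np.argsort(d2)[:k]                  # indices of the k closest pixels
        return I[ys[idx], xs[idx]].mean(axis=0)   # average their colors

    return nearest_mean(fg), nearest_mean(bg)


# Usage: red foreground on the left, blue background on the right.
I = np.array([[[1, 0, 0], [1, 0, 0], [0.5, 0, 0.5], [0, 0, 1], [0, 0, 1]]], dtype=float)
fg = np.array([[True, True, False, False, False]])
bg = np.array([[False, False, False, True, True]])
F_p, B_p = estimate_fb(I, fg, bg, p=(0, 2), k=2)
```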

After estimating F and B at P, we estimate alpha by minimizing an energy:

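V = a * sum_P V(P) + b * sum_P sum_Q V(P,Q), where P ranges over the unknown region, Q over the 4-neighbors of P, and a, b are weights balancing the two terms

V(P) = ||I(P) - alpha(P)*F(P) - (1-alpha(P))*B(P)||^2, the data term: how well the estimated F, B and alpha reproduce the observed color I(P)

V(P,Q) = (alpha(P) - alpha(Q))^2, the smoothness term: neighboring pixels should have similar alpha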
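A minimal sketch of this minimization, assuming NumPy, F and B already estimated at every pixel, and the total energy summing the per-pixel terms over the unknown region while known pixels keep their alpha. Because V is quadratic in alpha, the best alpha(P) with its neighbors held fixed has a closed form. The sketch applies that update in red-black (checkerboard) order: same-colored pixels are never 4-neighbors, so each half-sweep is exact coordinate descent and V does not increase when started from alpha values in [0, 1]. Each update is clipped to [0, 1]. The names `energy`, `neighbor_sums`, `solve_alpha` and the defaults for `a`, `b` and `sweeps` are illustrative, not taken from the original.

```python
import numpy as np


def energy(I, F, B, alpha, unknown, a=1.0, b=0.1):
    """V = sum over unknown P of a*V(P) + b*sum_Q V(P,Q), Q in the 4-neighbors of P."""
    r = I - B  # observed color relative to the background
    d = F - B  # foreground-background color difference
    data = np.sum((r - alpha[..., None] * d) ** 2, axis=-1)  # V(P)
    smooth = np.zeros_like(alpha)  # sum_Q V(P,Q) over in-bounds neighbors
    smooth[1:, :] += (alpha[1:, :] - alpha[:-1, :]) ** 2
    smooth[:-1, :] += (alpha[:-1, :] - alpha[1:, :]) ** 2
    smooth[:, 1:] += (alpha[:, 1:] - alpha[:, :-1]) ** 2
    smooth[:, :-1] += (alpha[:, :-1] - alpha[:, 1:]) ** 2
    return float(np.sum((a * data + b * smooth)[unknown]))


def neighbor_sums(alpha, weight):
    """Per pixel: sum of weight*alpha and sum of weight over in-bounds 4-neighbors."""
    wa = weight * alpha
    num = np.zeros_like(alpha)
    den = np.zeros_like(alpha)
    num[1:, :] += wa[:-1, :]; den[1:, :] += weight[:-1, :]
    num[:-1, :] += wa[1:, :]; den[:-1, :] += weight[1:, :]
    num[:, 1:] += wa[:, :-1]; den[:, 1:] += weight[:, :-1]
    num[:, :-1] += wa[:, 1:]; den[:, :-1] += weight[:, 1:]
    return num, den


def solve_alpha(I, F, B, alpha0, unknown, a=1.0, b=0.1, sweeps=200):
    """Minimize V over alpha in the unknown region by red-black coordinate descent."""
    r, d = I - B, F - B
    dr = np.sum(d * r, axis=-1)  # (F-B).(I-B) per pixel
    dd = np.sum(d * d, axis=-1)  # ||F-B||^2 per pixel
    # An unknown neighbor Q appears in V(P,Q) and in V(Q,P), so it counts twice.
    weight = 1.0 + unknown.astype(float)
    alpha = alpha0.astype(float).copy()
    yy, xx = np.indices(alpha.shape)
    red = (yy + xx) % 2 == 0
    for _ in range(sweeps):
        for color in (red, ~red):
            num, den = neighbor_sums(alpha, weight)
            # Zero of dV/dalpha(P) with the neighbors held fixed.
            upd = (a * dr + b * num) / np.maximum(a * dd + b * den, 1e-12)
            mask = unknown & color
            alpha[mask] = np.clip(upd[mask], 0.0, 1.0)
    return alpha
```

With b = 0 each update reduces to the per-pixel least-squares solution alpha(P) = (I-B)·(F-B) / ||F-B||^2, clipped to [0, 1]; increasing b trades fidelity to the observed colors for a smoother matte.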

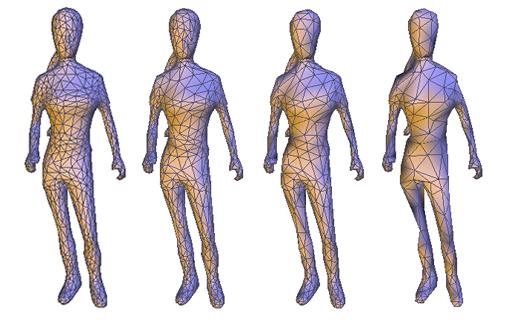

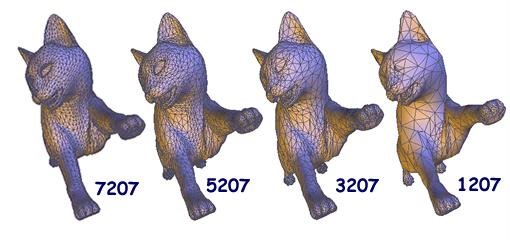

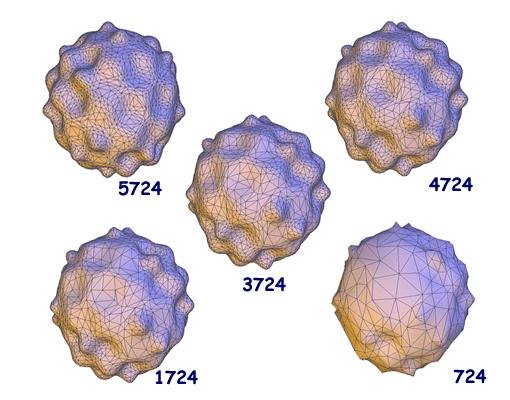

Result

References (all can be found online, e.g. via Google)

- Y. Chuang, B. Curless, D. H. Salesin, R. Szeliski, A Bayesian Approach to Digital Matting.

- J. Sun, J. Jia, C. K. Tang, H. Y. Shum, Poisson Matting.

- A. Levin, D. Lischinski, Y. Weiss, A Closed Form Solution to Natural Image Matting.

- J. Wang, M. F. Cohen, An Iterative Optimization Approach for Unified Image Segmentation and Matting.